Automatic evaluation of Corona rapid tests

Recently, rapid antigen tests for detecting COVID-19 infections have become popular, since they deliver results much faster than PCR tests. However, evaluating these tests manually is time-consuming and error-prone, especially in large-scale testing scenarios. To address this issue, a project was started as part of the development of the Smart Health Care Platform with the goal of automating the evaluation of rapid antigen tests.

To accomplish this, a device called a round-timer was built. Test cassettes that have already been loaded with a sample from the person being tested are placed into it. Once the specified waiting time has elapsed, the round-timer automatically takes a photo of the test cassette. My task was to develop the software, including the image recognition AI, that automatically evaluates this photo.
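The round-timer's control logic is not described here, but the idea of photographing each cassette once its waiting time has elapsed can be sketched as a small scheduler. This is a minimal, hypothetical sketch: `CaptureScheduler`, `PendingTest`, the slot numbers, and the 15-minute `WAIT_SECONDS` default are illustrative assumptions, not the device's actual software. The real waiting time comes from the test manufacturer's instructions.

```python
import heapq
from dataclasses import dataclass, field
from typing import List

# Hypothetical waiting time; the real value comes from the test's instructions.
WAIT_SECONDS = 15 * 60


@dataclass(order=True)
class PendingTest:
    due_at: float                      # timestamp at which the photo is due
    slot: int = field(compare=False)   # hypothetical position of the cassette in the round-timer


class CaptureScheduler:
    """Tracks inserted cassettes and reports which ones are ready for a photo."""

    def __init__(self, wait_seconds: float = WAIT_SECONDS) -> None:
        self.wait_seconds = wait_seconds
        self._queue: List[PendingTest] = []  # min-heap ordered by due_at

    def insert(self, slot: int, inserted_at: float) -> None:
        # Schedule the photo for the moment the waiting time has elapsed.
        heapq.heappush(self._queue, PendingTest(inserted_at + self.wait_seconds, slot))

    def due(self, now: float) -> List[int]:
        # Pop every cassette whose waiting time is over, earliest first.
        ready = []
        while self._queue and self._queue[0].due_at <= now:
            ready.append(heapq.heappop(self._queue).slot)
        return ready


scheduler = CaptureScheduler(wait_seconds=900)
scheduler.insert(slot=3, inserted_at=0.0)
scheduler.insert(slot=7, inserted_at=60.0)
print(scheduler.due(now=900.0))   # [3]
print(scheduler.due(now=1000.0))  # [7]
```

A heap keeps the next due cassette at the front, so each check only inspects tests whose time is actually up.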

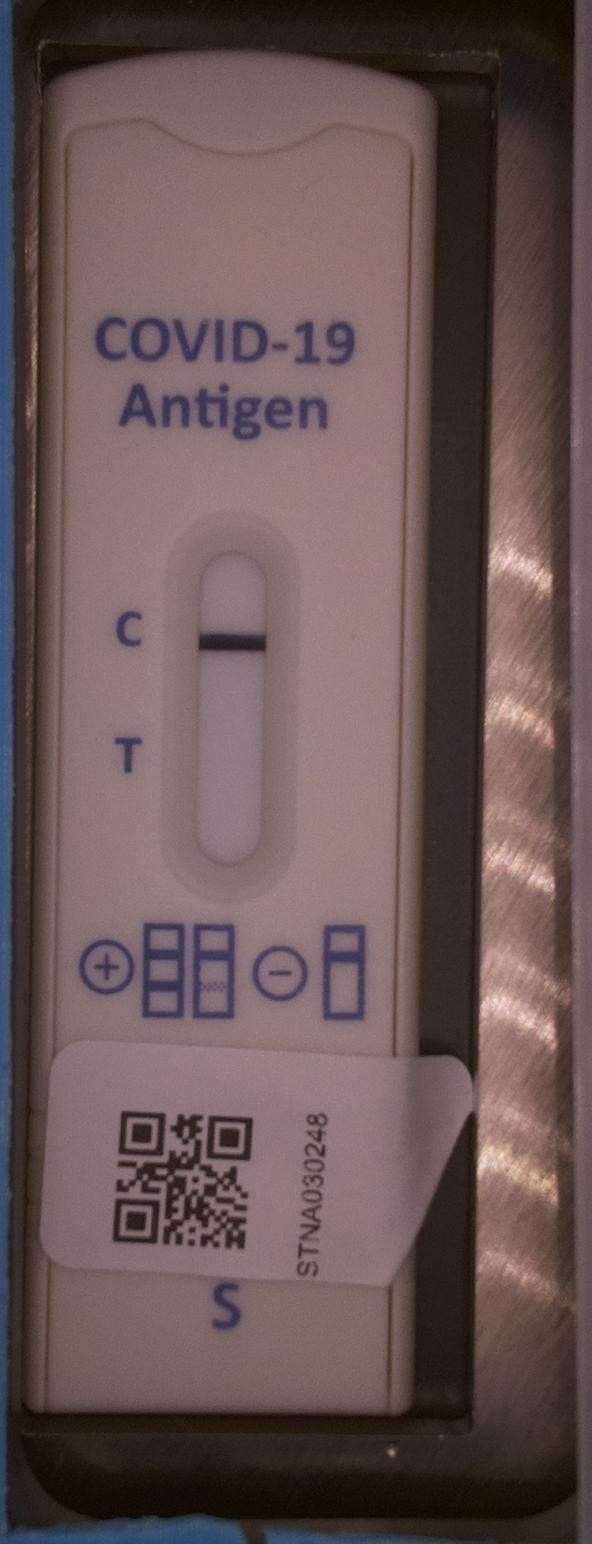

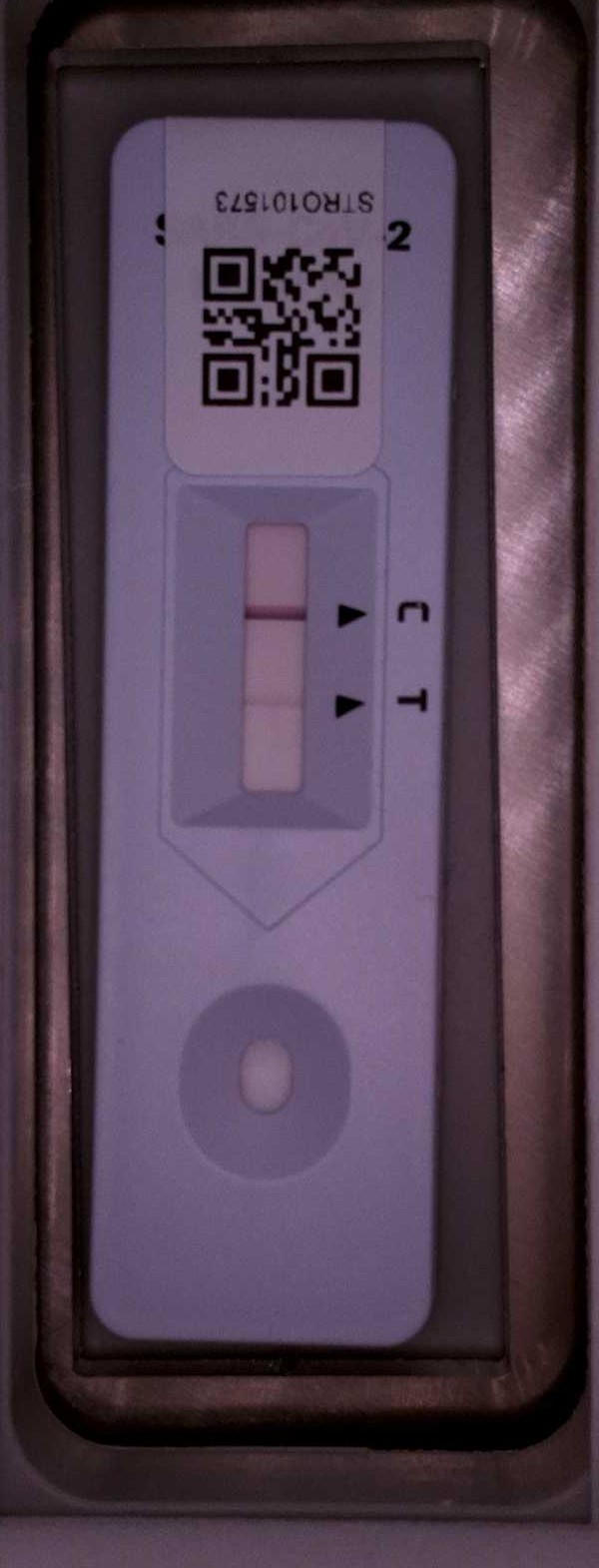

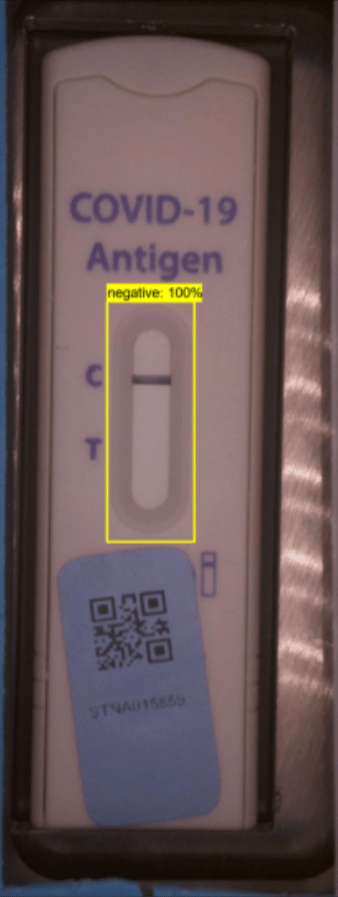

On the left is an image of such a round-timer; on the right is an image of a rapid antigen test as photographed by the round-timer.

For image recognition, I first used the pretrained neural network VGG-19, which I fine-tuned on images of rapid antigen tests. For each rapid test image, it outputs the probability that the test is positive, negative, or invalid. Positive and invalid tests were then shown to a human for verification. However, this approach ran into problems because of the fluid boundaries between the different states (a test can also be very slightly positive) and because the model could not correctly classify images of tests from other manufacturers. Therefore, I had to refine the model further.
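To turn the network's raw scores into the per-class probabilities described above, a classification head typically applies a softmax. The sketch below assumes that VGG-19's last layer was replaced by a three-output layer (one logit per class) and reimplements the softmax in plain Python to stay self-contained. `CLASSES` and `classify` are hypothetical names; a deep-learning framework would normally compute this step itself.

```python
import math
from typing import Dict, List, Tuple

# Assumed order of the three outputs of the replaced VGG-19 classification layer.
CLASSES = ("positive", "negative", "invalid")


def softmax(logits: List[float]) -> List[float]:
    # Subtract the largest logit before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits: List[float]) -> Tuple[str, Dict[str, float], bool]:
    """Turn raw scores into class probabilities and a review decision."""
    probs = dict(zip(CLASSES, softmax(logits)))
    label = max(probs, key=probs.get)
    # First approach: positive and invalid results are shown to a human.
    needs_review = label in ("positive", "invalid")
    return label, probs, needs_review


label, probs, needs_review = classify([0.5, 3.0, -1.0])
print(label, needs_review)  # negative False
```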

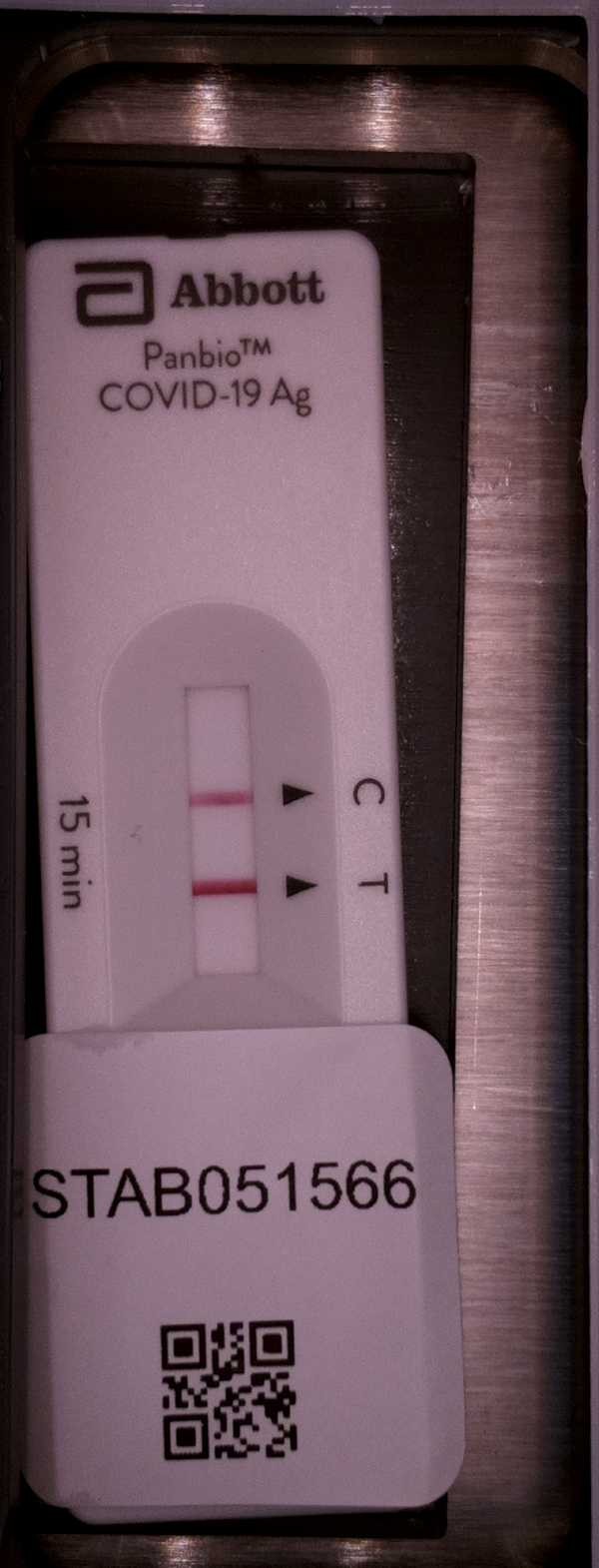

On the left is a test that is not negative but also not clearly positive. In the middle is a test from another brand that the AI had difficulty evaluating correctly. On the right is a test whose evaluation window is smeared.

In the second approach, I made the image recognition model more robust to the smooth transitions between states and to tests from new manufacturers. To do this, I first combined the previous model with an additional object detection model, which I trained solely to locate and crop out the test window on cassettes from any manufacturer. I then retrained the original model to decide, from the test window alone, whether the test is positive, negative, or invalid. As a result, it no longer has to look at the entire cassette, and the manufacturer no longer matters, because the test window is always laid out the same way regardless of the brand. The result was a more robust model that can reliably handle tests from new manufacturers.
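The two-stage design can be sketched as a detector that returns a bounding box, a crop, and a classifier that only ever sees the cropped window. This is a minimal sketch under stated assumptions: images are plain nested lists, the box format `(top, left, bottom, right)` is chosen for illustration, and `detect_window` / `classify_window` stand in for the trained object detection and classification models, which are not shown. `demo_detector` and `demo_classifier` are hypothetical placeholders.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]        # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive


def crop(image: Image, box: Box) -> Image:
    """Cut the detected test window out of the full cassette photo."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


def evaluate(image: Image,
             detect_window: Callable[[Image], Box],
             classify_window: Callable[[Image], str]) -> str:
    # Stage 1: the detector only has to locate the test window.
    box = detect_window(image)
    # Stage 2: the classifier sees only the window, so the brand-specific
    # housing and printing of the cassette no longer affect the result.
    return classify_window(crop(image, box))


# Hypothetical stand-ins for the two trained models.
def demo_detector(image: Image) -> Box:
    return (1, 1, 3, 4)


def demo_classifier(window: Image) -> str:
    return "positive" if any(v > 0.5 for row in window for v in row) else "negative"


photo = [[0.0] * 6 for _ in range(4)]
photo[1][2] = photo[2][2] = 1.0  # a fake test line (high values) inside the window
print(evaluate(photo, demo_detector, demo_classifier))  # positive
```

Because the classifier's input is always just the window, adding a new brand mainly requires the detector to find that brand's window.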

To address the fluid boundaries between states, I added two more classes: smeared and slightly positive. The model now classifies each test, based on its test window, as positive, negative, invalid, smeared, or slightly positive. Tests classified as invalid, positive, smeared, or slightly positive are then reviewed manually to ensure safety. Only clearly negative tests are evaluated fully automatically.
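The review rule described above amounts to a simple routing function. In this sketch, the `Result` enum and `route` are hypothetical names. The optional `min_confidence` threshold is an added assumption (not part of the described system) showing how a low-confidence negative could also be sent to a human; with the default of 0.0 it has no effect.

```python
from enum import Enum


class Result(Enum):
    NEGATIVE = "negative"
    POSITIVE = "positive"
    INVALID = "invalid"
    SMEARED = "smeared"
    SLIGHTLY_POSITIVE = "slightly positive"


def route(result: Result, confidence: float, min_confidence: float = 0.0) -> str:
    """Decide whether a classified test can be finalized without a human."""
    # Only a negative result is evaluated fully automatically. The
    # min_confidence check is a hypothetical extra safeguard (0.0 disables it).
    if result is Result.NEGATIVE and confidence >= min_confidence:
        return "auto"
    # Positive, invalid, smeared, slightly positive, and low-confidence
    # negatives all go to a human reviewer.
    return "manual review"


print(route(Result.NEGATIVE, 0.98))                      # auto
print(route(Result.SLIGHTLY_POSITIVE, 0.98))             # manual review
print(route(Result.NEGATIVE, 0.70, min_confidence=0.9))  # manual review
```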

I also use preprocessing such as grayscale conversion and data augmentation such as rotation and flipping to further increase the robustness of the models. Grayscale conversion reduces the influence of different lighting conditions and light sources, while rotation and flipping make the models less sensitive to how the cassette is oriented in the photo.
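The preprocessing and augmentation steps can be illustrated in plain Python. This sketch is an assumption about how such steps might look, not the project's actual code: grayscale conversion uses the ITU-R BT.601 luma weights (the same ones Pillow uses for mode `"L"`), and rotation is simplified to multiples of 90 degrees because arbitrary angles require interpolation. In practice, libraries such as torchvision provide equivalents (`transforms.Grayscale`, `transforms.RandomRotation`, `transforms.RandomHorizontalFlip`).

```python
import random
from typing import List, Tuple

RGBImage = List[List[Tuple[int, int, int]]]
GrayImage = List[List[float]]


def to_grayscale(img: RGBImage) -> GrayImage:
    # ITU-R BT.601 luma weights, also used by Pillow's mode "L" conversion.
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in img]


def flip_horizontal(img: GrayImage) -> GrayImage:
    # Mirror each row left to right.
    return [list(reversed(row)) for row in img]


def rotate_90(img: GrayImage) -> GrayImage:
    # Clockwise rotation: reverse the row order, then transpose.
    return [list(col) for col in zip(*img[::-1])]


def augment(img: GrayImage, rng: random.Random) -> GrayImage:
    """Apply a random rotation (multiple of 90 degrees) and an optional flip."""
    for _ in range(rng.randrange(4)):
        img = rotate_90(img)
    if rng.random() < 0.5:
        img = flip_horizontal(img)
    return img


rng = random.Random(0)
gray = to_grayscale([[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]])
augmented = augment(gray, rng)
print(rotate_90([[1, 2], [3, 4]]))  # [[3, 1], [4, 2]]
```

In a setup like this, grayscale conversion would typically be applied to every image (training and inference), while the random rotations and flips would only be applied to training images.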

In this form, the model has demonstrated over 97% accuracy. It has been running for over half a year, relieving healthcare workers of the burden of evaluating hundreds of negative tests every day.